Large language models (LLMs) have become increasingly capable of understanding and generating human-like text. However, they face a significant limitation: on their own, they are cut off from the real world, unable to access up-to-date information or interact with external systems without custom integrations. This is where the Model Context Protocol (MCP) comes in: an open standard that connects AI models to the external tools and data sources they need to be useful.

In this guide, we'll explore what MCP is, why it matters for AI development, and how to implement it in your projects, then examine real-world examples that show it in use.

Understanding Model Context Protocol (MCP)

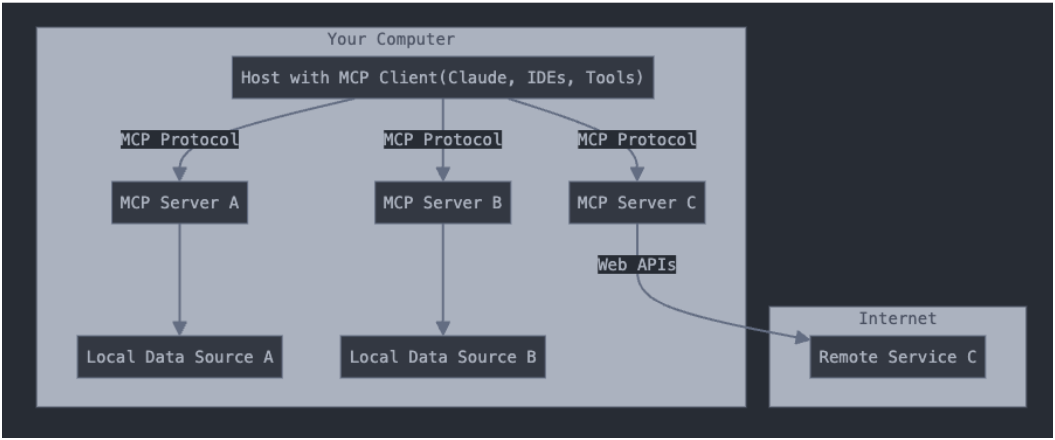

The high-level architecture diagram above shows how a host process (such as Claude or a local agent) interacts with multiple MCP servers to access both local and remote data.

What is MCP?

The Model Context Protocol (MCP) is an open standard developed by Anthropic for integrating AI models with external data sources, tools, and systems. It standardizes how applications provide context to LLMs, so a single protocol covers connections that would otherwise each need their own integration.

Think of MCP as a "USB-C for AI integrations": a universal adapter that lets any compliant AI application interact with any compatible data source or service without custom code for each connection. This standardization removes the need to maintain a separate integration for each data source or tool, which reduces development time and complexity.
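To make the "universal adapter" idea concrete, here is a minimal sketch of a host talking to two different servers through one message shape. MCP messages are JSON-RPC 2.0, and `tools/list` and `tools/call` are method names from the MCP specification. Everything else is an assumption made for illustration: `ToyServer`, `call_tool`, `list_tools`, the server names, and the tools are hypothetical, the servers run in-process rather than over a real transport, the initialization handshake is skipped, and the tool descriptors are trimmed to a name only.

```python
from itertools import count

_ids = count(1)  # JSON-RPC request ids


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request as a plain dict."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


class ToyServer:
    """Hypothetical in-process stand-in for an MCP server (not the real SDK)."""

    def __init__(self, tools):
        self.tools = tools  # maps tool name -> Python callable

    def handle(self, request):
        method = request["method"]
        if method == "tools/list":
            # Simplified: real tool descriptors carry more than a name.
            result = {"tools": [{"name": name} for name in sorted(self.tools)]}
        elif method == "tools/call":
            params = request["params"]
            value = self.tools[params["name"]](**params["arguments"])
            result = {"content": [{"type": "text", "text": str(value)}]}
        else:
            # -32601 is the JSON-RPC 2.0 "Method not found" error code.
            error = {"code": -32601, "message": "Method not found"}
            return {"jsonrpc": "2.0", "id": request["id"], "error": error}
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}


# Two unrelated "servers"; the host uses the same code path for both.
servers = {
    "files": ToyServer({"read_note": lambda title: f"contents of {title}"}),
    "math": ToyServer({"add": lambda a, b: a + b}),
}


def list_tools(server_name):
    """Ask a server which tools it offers."""
    response = servers[server_name].handle(make_request("tools/list"))
    return [tool["name"] for tool in response["result"]["tools"]]


def call_tool(server_name, tool, arguments):
    """Invoke a tool by name and return the text of the first content item."""
    request = make_request("tools/call", {"name": tool, "arguments": arguments})
    response = servers[server_name].handle(request)
    return response["result"]["content"][0]["text"]


if __name__ == "__main__":
    print(list_tools("math"))
    print(call_tool("math", "add", {"a": 2, "b": 3}))
    print(call_tool("files", "read_note", {"title": "todo"}))
```

The point of the sketch is that `call_tool` never needs to know what a server does internally. Adding a third data source means registering another server, not writing another integration.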

The Architecture of MCP

Here's a visual representation of how MCP works: